TiDB Lightning Tutorial

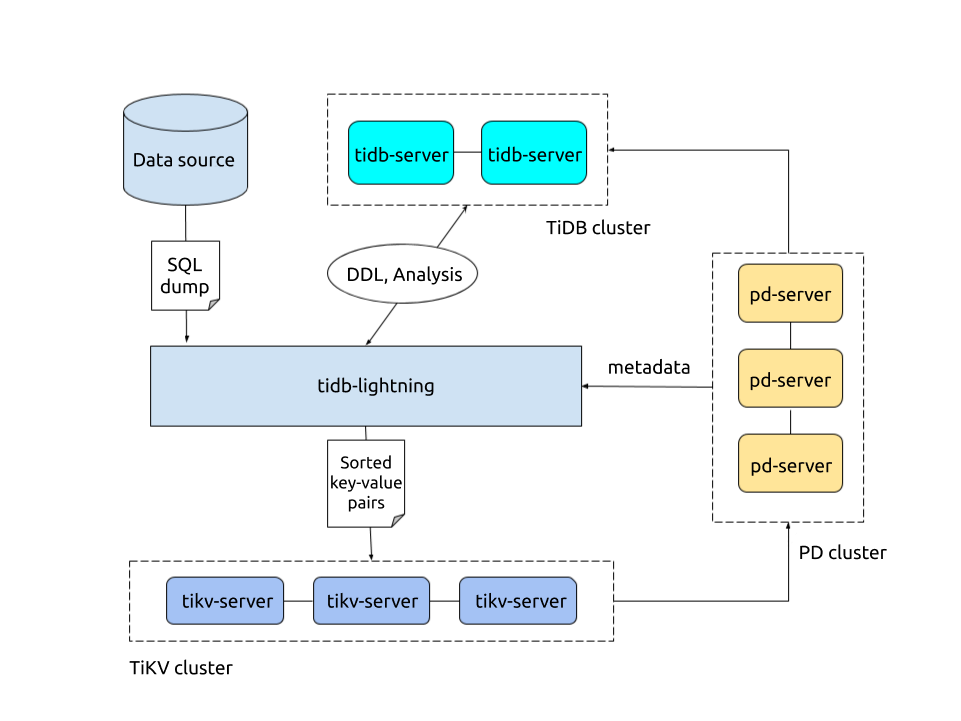

TiDB Lightning is a tool for quickly importing large amounts of data into a TiDB cluster in full. Currently, TiDB Lightning can read SQL dumps exported by Dumpling, as well as CSV data sources. You can use it in the following two scenarios:

- Import large amounts of new data quickly

- Back up and restore all the data

Prerequisites

This tutorial assumes that you use several new, clean CentOS 7 instances. You can use VMware, VirtualBox, or other tools to deploy virtual machines locally, or use small cloud virtual machines on a vendor's platform. Because TiDB Lightning consumes a large amount of computing resources, it is recommended that you allocate at least 16 GB of memory and 32 CPU cores to it for the best performance.
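A rough way to compare a Linux machine against this recommendation is sketched below. The function name check_lightning_resources is hypothetical. It reads the core count from nproc and memory from MemTotal in /proc/meminfo, and treats 16 GB as 16 GiB. Because MemTotal usually reports slightly less than the installed RAM, a machine sold as 16 GB may be flagged even though it is close to the target.

```bash
# Hypothetical helper: compare this machine with the tutorial's recommendation
# of at least 32 CPU cores and 16 GB of memory. Linux only (reads /proc/meminfo).
# Optional arguments override the detected values: $1 = cores, $2 = memory in kB.
check_lightning_resources() {
  local cores="${1:-$(nproc)}"
  local mem_kb="${2:-$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)}"
  local min_cores=32
  local min_mem_kb=$((16 * 1024 * 1024))   # 16 GiB expressed in kB, the unit MemTotal uses
  local status=0

  if [ "$cores" -lt "$min_cores" ]; then
    echo "warning: $cores CPU cores found, $min_cores recommended"
    status=1
  fi
  if [ "$mem_kb" -lt "$min_mem_kb" ]; then
    echo "warning: $((mem_kb / 1024)) MB of memory found, $((min_mem_kb / 1024)) MB recommended"
    status=1
  fi
  if [ "$status" -eq 0 ]; then
    echo "ok: $cores cores, $((mem_kb / 1024)) MB of memory"
  fi
  return "$status"
}
```

Running check_lightning_resources with no arguments inspects the current machine; a non-zero exit status means at least one value is below the recommendation.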

Prepare full backup data

First, use Dumpling to export data from MySQL:

./bin/dumpling -h 127.0.0.1 -P 3306 -u root -t 16 -F 256MB -B test -f 'test.t[12]' -o /data/my_database/

In the above command:

- -B test: the data is exported from the test database.
- -f test.t[12]: only the test.t1 and test.t2 tables are exported.
- -t 16: 16 threads are used to export the data.
- -F 256MB: each table's data is split into chunks (files) of about 256 MB each.

After executing this command, the full backup data is exported to the /data/my_database directory.
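Before importing, it can help to confirm that the export produced what you expect. The sketch below assumes Dumpling's usual file naming (database DDL in files ending in -schema-create.sql, table DDL in files ending in -schema.sql, and table data in the remaining .sql files); check these names against your Dumpling version. The helper name summarize_dump_dir is hypothetical.

```bash
# Hypothetical helper: summarize a Dumpling output directory.
# Assumed naming (verify for your Dumpling version):
#   <db>-schema-create.sql   database DDL
#   <db>.<table>-schema.sql  table DDL
#   <db>.<table>.<n>.sql     table data, split according to -F
summarize_dump_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "error: $dir is not a directory" >&2
    return 1
  fi
  local db_schemas table_schemas data_files
  db_schemas=$(find "$dir" -maxdepth 1 -type f -name '*-schema-create.sql' | wc -l)
  table_schemas=$(find "$dir" -maxdepth 1 -type f -name '*-schema.sql' | wc -l)
  data_files=$(find "$dir" -maxdepth 1 -type f -name '*.sql' \
    ! -name '*-schema.sql' ! -name '*-schema-create.sql' | wc -l)
  # Arithmetic expansion strips the padding that some wc implementations add
  echo "database schema files: $((db_schemas))"
  echo "table schema files: $((table_schemas))"
  echo "data files: $((data_files))"
}
```

For the command above, summarize_dump_dir /data/my_database/ would typically report one database schema file, two table schema files (for test.t1 and test.t2), and at least one data file for each non-empty table.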

Deploy TiDB Lightning

Step 1: Deploy TiDB cluster

Before the data import, you need to deploy a TiDB cluster (later than v2.0.9). In this tutorial, TiDB v4.0.3 is used. For the deployment method, refer to TiDB Introduction.
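To confirm that the target cluster is later than v2.0.9, you can compare its reported version. This is a minimal sketch under two assumptions: that TiDB's VERSION() returns a string such as 5.7.25-TiDB-v4.0.3 (a MySQL compatibility version followed by the TiDB release), and that sort -V (version sort, as in GNU coreutils) is available. The helper names tidb_release and version_later_than are hypothetical.

```bash
# Hypothetical helpers for checking that the target TiDB cluster is later than v2.0.9.

# Extracts the TiDB release from a VERSION() string.
# Assumed input format: "5.7.25-TiDB-v4.0.3" -> "4.0.3"
tidb_release() {
  # Remove the longest prefix that ends in "-TiDB-v"
  echo "${1##*-TiDB-v}"
}

# Succeeds if version $1 is strictly later than version $2.
# Relies on sort -V to order dotted version numbers (so 10.0.0 sorts after 2.0.9).
version_later_than() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example, you could fetch the version with mysql -h 172.16.31.2 -P 4000 -u root -p -N -e 'SELECT VERSION();', pass the result to tidb_release, and then run version_later_than on the release and 2.0.9.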

Step 2: Download TiDB Lightning installation package

Download the TiDB Lightning installation package from the following link:

Step 3: Start tidb-lightning

Upload bin/tidb-lightning and bin/tidb-lightning-ctl from the package to the server where TiDB Lightning is deployed.

Upload the prepared data source to the server.

Configure tidb-lightning.toml as follows:

    [lightning]
    # Logging
    level = "info"
    file = "tidb-lightning.log"

    [tikv-importer]
    # Uses the Local-backend
    backend = "local"
    # Sets the directory for temporarily storing the sorted key-value pairs.
    # The target directory must be empty.
    sorted-kv-dir = "/mnt/ssd/sorted-kv-dir"

    [mydumper]
    # Local source data directory (the directory Dumpling exported to)
    data-source-dir = "/data/my_database/"
    # Configures the wildcard rule. By default, all tables in the mysql, sys, INFORMATION_SCHEMA, PERFORMANCE_SCHEMA, METRICS_SCHEMA, and INSPECTION_SCHEMA system databases are filtered out.
    # If this item is not configured, the "cannot find schema" error occurs when system tables are imported.
    filter = ['*.*', '!mysql.*', '!sys.*', '!INFORMATION_SCHEMA.*', '!PERFORMANCE_SCHEMA.*', '!METRICS_SCHEMA.*', '!INSPECTION_SCHEMA.*']

    [tidb]
    # Information of the target cluster
    host = "172.16.31.2"
    port = 4000
    user = "root"
    password = "rootroot"
    # Table schema information is fetched from TiDB via this status-port.
    status-port = 10080
    # The PD address of the cluster
    pd-addr = "172.16.31.3:2379"

After configuring the parameters properly, use a nohup command to start the tidb-lightning process. If you run the command directly in a terminal, the process might exit when it receives a SIGHUP signal. Instead, it is preferable to run a bash script that contains the nohup command:

    #!/bin/bash
    nohup ./tidb-lightning -config tidb-lightning.toml > nohup.out &
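Because the configuration requires sorted-kv-dir to be empty, a quick pre-flight check before launching can save a failed run. The helpers below are a hypothetical sketch, not part of TiDB Lightning. check_sorted_kv_dir also requires the directory to exist (an extra assumption of this sketch; creating it with mkdir -p beforehand is harmless), and check_data_source_dir only verifies that the data source directory contains at least one .sql file.

```bash
# Hypothetical pre-flight checks to run before starting tidb-lightning.

# sorted-kv-dir must be empty; this sketch also insists that it exists.
check_sorted_kv_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "error: $dir does not exist; create it first (mkdir -p $dir)" >&2
    return 1
  fi
  # ls -A lists all entries, including hidden ones, except . and ..
  if [ -n "$(ls -A "$dir")" ]; then
    echo "error: $dir is not empty" >&2
    return 1
  fi
  echo "ok: $dir is empty"
}

# The data source directory should exist and contain exported .sql files.
check_data_source_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "error: $dir does not exist" >&2
    return 1
  fi
  if [ -z "$(find "$dir" -maxdepth 1 -type f -name '*.sql' | head -n 1)" ]; then
    echo "error: no .sql files found in $dir" >&2
    return 1
  fi
  echo "ok: $dir contains .sql files"
}
```

For example, run check_sorted_kv_dir /mnt/ssd/sorted-kv-dir and check_data_source_dir on the data-source-dir from your tidb-lightning.toml, and start tidb-lightning only if both succeed.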

Step 4: Check data integrity

After the import is completed, TiDB Lightning exits automatically. If the import is successful, you can find "tidb lightning exit" in the last line of the log file.
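This check can be scripted. The minimal sketch below relies only on the rule stated above, that a successful run ends with "tidb lightning exit" in the last line of the log. The helper name lightning_finished is hypothetical, and its default path tidb-lightning.log comes from the file setting in the [lightning] section of the configuration (relative to the directory tidb-lightning was started in).

```bash
# Hypothetical helper: report whether a TiDB Lightning run has finished,
# based on "tidb lightning exit" appearing in the last line of the log.
# Exit status: 0 = finished, 1 = not finished yet (or failed), 2 = log missing.
lightning_finished() {
  local log="${1:-tidb-lightning.log}"
  if [ ! -f "$log" ]; then
    echo "error: log file $log not found" >&2
    return 2
  fi
  if tail -n 1 "$log" | grep -q 'tidb lightning exit'; then
    echo "finished: $log ends with 'tidb lightning exit'"
    return 0
  fi
  echo "not finished (or failed); last line of $log:"
  tail -n 1 "$log"
  return 1
}
```

If lightning_finished returns 1 after the process has exited, inspect the log for errors before re-running the import.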

If any error occurs, refer to TiDB Lightning FAQs.

Summary

This tutorial briefly introduces what TiDB Lightning is and how to quickly deploy TiDB Lightning to import full backup data into a TiDB cluster.

For more details on the features and usage of TiDB Lightning, refer to TiDB Lightning Overview.